- Activates a user-defined function after hotword detection

- Project status: prototype

See real examples:

- https://blog.aspiresys.pl/technology/building-jarvis-nlp-hot-word-detection/

- https://picovoice.ai/

- https://github.com/Kitt-AI/snowboy/


- Clone this repository to your Raspberry Pi.

- Create a Python virtual environment and install the packages from requirements.txt.

$ python3 main.py

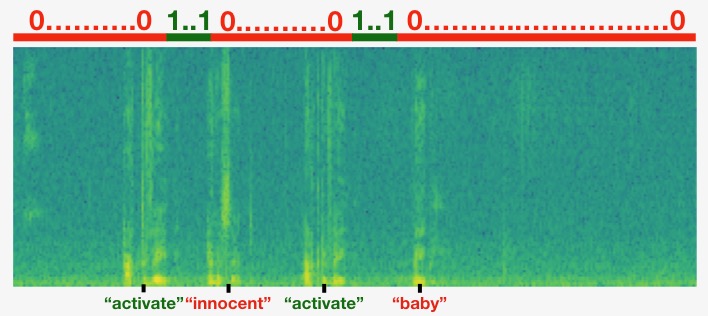

Below is an example of a Mel spectrogram, the model prediction, and the true labels for a training example from the dataset. Dev set accuracy is 97.7%. More images can be found in the images directory.

The idea for this project is based on real-world examples such as Alexa, Siri, and Google Assistant. Additional knowledge was taken from Coursera.

See requirements.txt

Additional setup notes

This project is part of my bachelor's degree project. The idea of not using an end-to-end solution for a simple voice assistant, and instead filtering requests by a hotword, was taken from the real-world examples listed above. The data preprocessing step was significantly modified to improve model performance. In my case, using a Mel spectrogram as the input preprocessing step improved accuracy by about 8 percentage points (from 89% to 97% on the dev set).

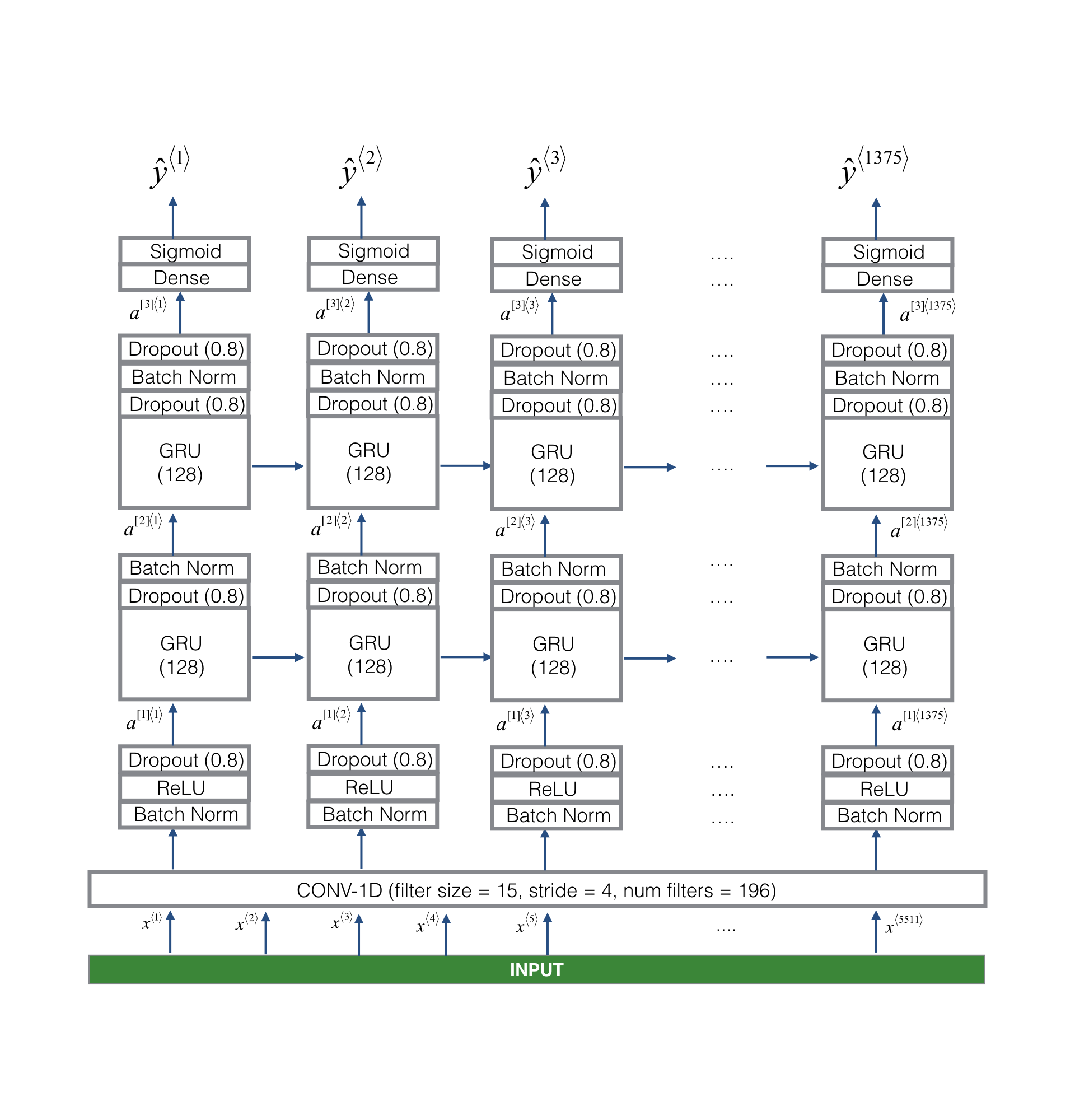
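As an illustration of the Mel spectrogram preprocessing mentioned above, here is a minimal NumPy-only sketch. It is not the project's actual code: the function names and every parameter value (16 kHz sample rate, 512-sample FFT, 160-sample hop, 40 Mel bands) are assumptions chosen for the example.

```python
import numpy as np

def hz_to_mel(hz):
    # Common HTK-style formula for converting Hz to the mel scale.
    return 2595.0 * np.log10(1.0 + np.asarray(hz, dtype=float) / 700.0)

def mel_to_hz(mel):
    return 700.0 * (10.0 ** (np.asarray(mel, dtype=float) / 2595.0) - 1.0)

def mel_filterbank(sr, n_fft, n_mels):
    """Triangular filters evenly spaced on the mel scale.

    Returns an array of shape (n_mels, n_fft // 2 + 1).
    """
    fft_freqs = np.linspace(0.0, sr / 2.0, n_fft // 2 + 1)
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2)
    hz_points = mel_to_hz(mel_points)
    fb = np.zeros((n_mels, fft_freqs.size))
    for m in range(n_mels):
        left, center, right = hz_points[m:m + 3]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        fb[m] = np.clip(np.minimum(rising, falling), 0.0, None)
    return fb

def log_mel_spectrogram(signal, sr=16000, n_fft=512, hop=160, n_mels=40):
    """Return log-scaled mel band energies, shape (n_frames, n_mels)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack(
        [signal[i * hop:i * hop + n_fft] * window for i in range(n_frames)]
    )
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2   # (n_frames, n_fft//2 + 1)
    mel = power @ mel_filterbank(sr, n_fft, n_mels).T  # (n_frames, n_mels)
    return 10.0 * np.log10(mel + 1e-10)                # small offset avoids log(0)

# Example input: one second of a 440 Hz tone standing in for recorded audio.
sr = 16000
tone = np.sin(2 * np.pi * 440 * np.arange(sr) / sr)
features = log_mel_spectrogram(tone, sr=sr)
```

Compared with a linear-frequency spectrogram, the Mel filterbank compresses the high-frequency bins into fewer bands, so the model receives a smaller input that emphasises the frequency range where speech carries most of its information.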

The model architecture was taken from the final course of Andrew Ng's Deep Learning series on Coursera. The only difference is the number of input and output nodes. The complete model architecture is shown below:

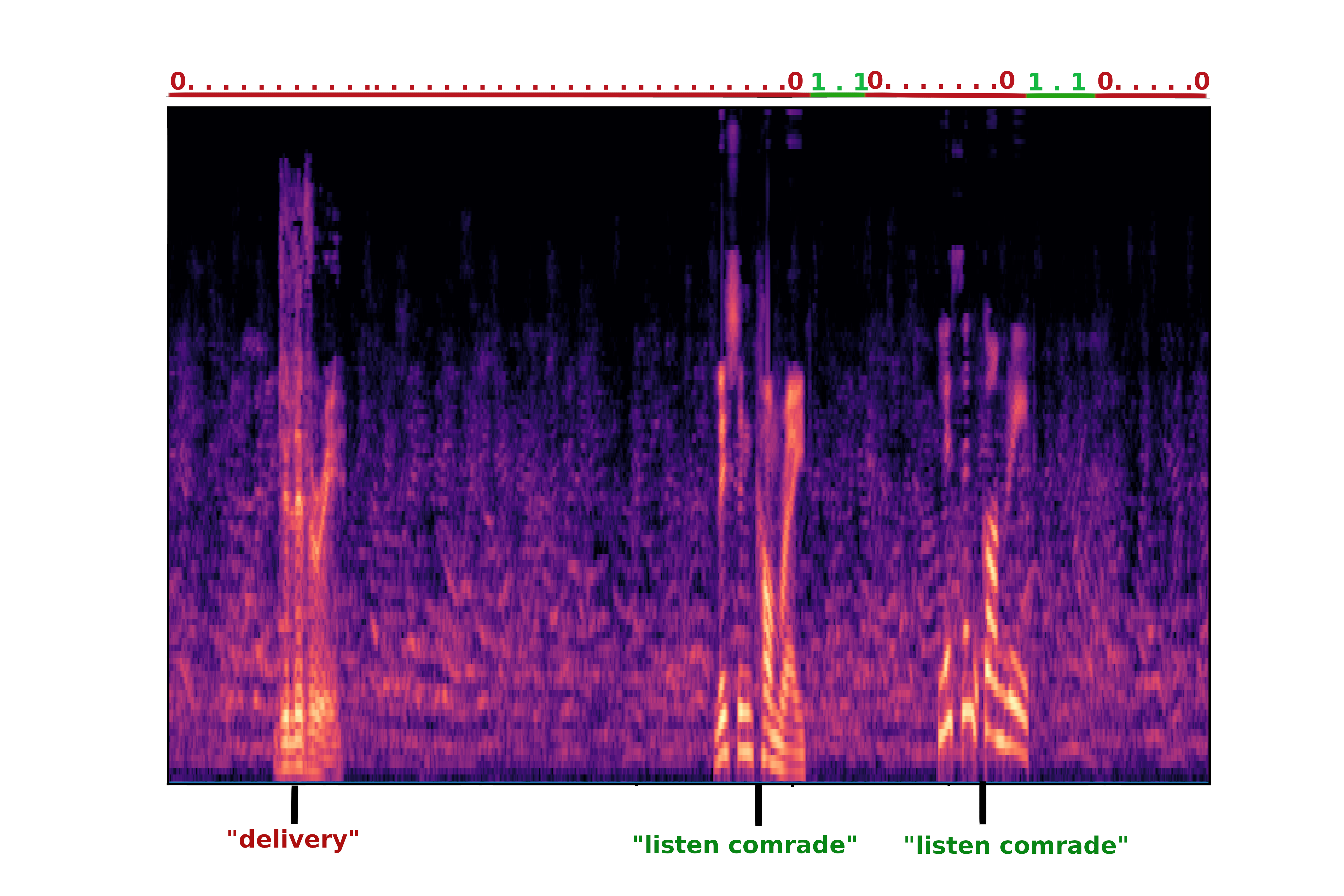

The dataset was generated with the help of Google Cloud Text-to-Speech. Using WaveNet voices, I gathered about 50 one-second audio files containing the trigger phrase. Background audio was created by cutting and converting YouTube videos such as this one. Half of the trigger words were recorded by me using Jupyter Notebook scripts, which will be posted in a separate directory. Labels were marked after every trigger word. Below are examples of such marking (the first one was taken from the Coursera Sequence course, and the second one was created by me):
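The overlay-and-label step described above could be sketched roughly as follows. This is an illustration, not the project's notebook code: `insert_clip` and `mark_label` are hypothetical helpers, and the 10-second background, 1375 output steps, and 50 positive steps per trigger word are assumed values.

```python
import numpy as np

def insert_clip(background, clip, start):
    """Overlay `clip` onto a copy of `background`, beginning at sample `start`."""
    mixed = background.copy()
    mixed[start:start + len(clip)] += clip
    return mixed

def mark_label(labels, clip_end_sample, n_samples, n_ones=50):
    """Set up to `n_ones` output steps to 1 right after the step where the clip ends."""
    n_steps = len(labels)
    end_step = int(clip_end_sample * n_steps / n_samples)
    # Slicing past the end of the array is safe; NumPy truncates the range.
    labels[end_step + 1:end_step + 1 + n_ones] = 1
    return labels

rng = np.random.default_rng(0)
sr = 16000                                            # assumed sample rate
background = 0.01 * rng.standard_normal(10 * sr)      # stand-in for background audio
trigger = 0.5 * np.sin(2 * np.pi * 300 * np.arange(sr) / sr)  # stand-in for a 1 s trigger clip

# Pick a random position where the whole clip fits inside the background.
start = int(rng.integers(0, len(background) - len(trigger)))
audio = insert_clip(background, trigger, start)
labels = mark_label(np.zeros(1375, dtype=int), start + len(trigger), len(background))
```

Placing the positive labels after the end of the trigger word, rather than during it, means the model is only asked to fire once it has heard the whole phrase.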

As the images above show, I chose the phrase "Listen comrade" as the trigger word. The model should play a distinct notification sound whenever someone speaks the trigger word.
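One way to turn per-step model probabilities into a single activation (a notification sound or any other user function) is sketched below. `run_on_hotword` and its `threshold`, `min_consecutive`, and `cooldown` parameters are hypothetical and not taken from the project's `main.py`.

```python
from typing import Callable, Sequence

def run_on_hotword(
    probs: Sequence[float],
    on_hotword: Callable[[int], None],
    threshold: float = 0.5,
    min_consecutive: int = 3,
    cooldown: int = 10,
) -> int:
    """Call `on_hotword(step)` once per detected trigger word.

    A detection requires `min_consecutive` steps above `threshold`, and a new
    detection is suppressed until more than `cooldown` steps have passed since
    the previous one. Returns the number of activations.
    """
    consecutive = 0
    last_fired = None
    fired = 0
    for step, p in enumerate(probs):
        consecutive = consecutive + 1 if p > threshold else 0
        ready = last_fired is None or step - last_fired > cooldown
        if consecutive >= min_consecutive and ready:
            on_hotword(step)      # e.g. play a sound or start a voice command
            last_fired = step
            fired += 1
            consecutive = 0
    return fired

# Example: simulated model output with two separate bursts of high probability.
events = []
probs = [0.1] * 5 + [0.9] * 8 + [0.1] * 20 + [0.8] * 4
count = run_on_hotword(probs, events.append)
```

Requiring several consecutive steps above the threshold filters out single-step spikes, and the cooldown keeps one spoken trigger word from firing the callback repeatedly.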