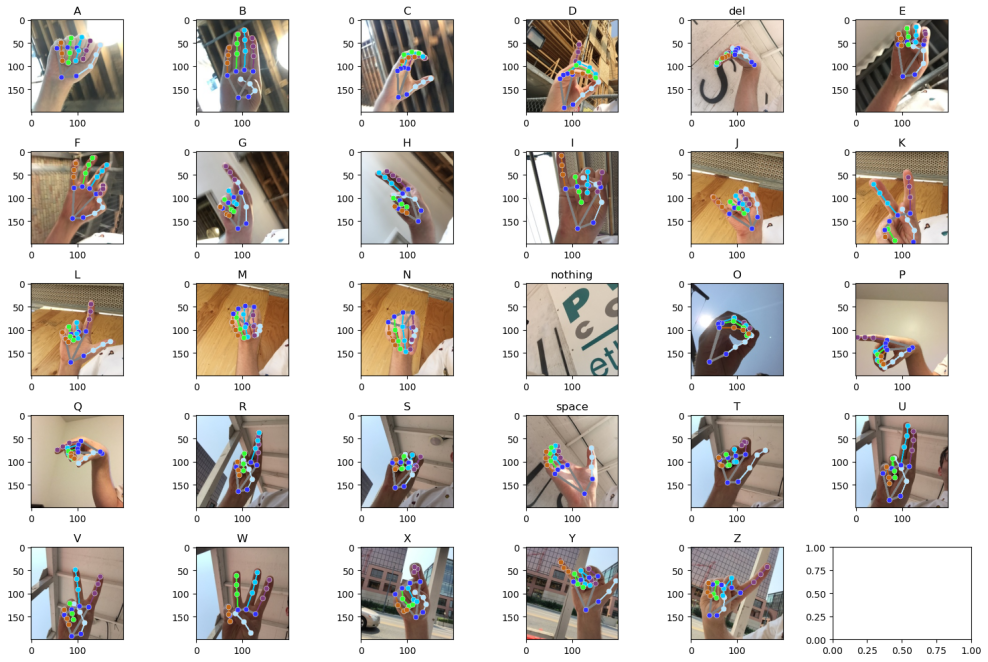

The ASL Sign Language Detector is a tool designed to recognize and interpret American Sign Language gestures. In this project, we generated hand landmarks using MediaPipe and then trained the model with the Support Vector Machine (SVM) algorithm provided by scikit-learn. We used a Kaggle dataset to train the model.

- Real-time Recognition: The detector recognizes ASL signs in real time, making it suitable for live interactions.

- User-Friendly Interface: The application comes with a user-friendly interface, making it accessible to users of all backgrounds.

Click here to detect the sign language.

- Click the Start button to turn on the camera, and make sure there is enough light.

- Show some ASL signs for it to detect.

- Click Stop to turn off the camera.

Clone the project

    git clone https://github.com/aashishops/ASL-Detector

Go to the project directory

    cd ASL-Detector

Install prerequisites

    pip install -r requirements.txt

Run the app on Streamlit

    streamlit run app.py

OR run the app on OpenCV

    python asl_gesture.py

- Hand Tracking: The detector uses hand tracking to locate and follow the user's hand gestures.

- Gesture Recognition: A machine learning model trained on a dataset of ASL signs recognizes and interprets the gestures.

The hand landmarks were generated using the MediaPipe library.
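As a rough illustration of landmark generation, the sketch below flattens the 21 hand landmarks that MediaPipe Hands reports into a single list of 63 values. The function names `landmarks_to_features` and `extract_hand_features` are hypothetical and not taken from this repository, and the detection settings are assumptions. It uses MediaPipe's `solutions.hands` interface, which may differ from what the project actually calls.

```python
from types import SimpleNamespace


def landmarks_to_features(landmarks):
    """Flatten landmarks (each with .x, .y, .z) into one flat list."""
    features = []
    for point in landmarks:
        features.extend([point.x, point.y, point.z])
    return features


def extract_hand_features(image_bgr):
    """Return 63 values for the first detected hand, or None if no hand is found.

    Hypothetical helper: the settings below are assumptions, not the project's.
    """
    # Imported lazily so landmarks_to_features works without these packages.
    import cv2
    import mediapipe as mp

    # MediaPipe expects RGB input, while OpenCV images are BGR.
    image_rgb = cv2.cvtColor(image_bgr, cv2.COLOR_BGR2RGB)
    with mp.solutions.hands.Hands(
        static_image_mode=True, max_num_hands=1, min_detection_confidence=0.5
    ) as hands:
        results = hands.process(image_rgb)
    if not results.multi_hand_landmarks:
        return None
    return landmarks_to_features(results.multi_hand_landmarks[0].landmark)


# Stand-in landmarks, used only to show the output shape without a camera.
fake_hand = [SimpleNamespace(x=i / 100, y=i / 50, z=0.0) for i in range(21)]
features = landmarks_to_features(fake_hand)
print(len(features))  # 63
```

Keeping the flattening step separate from the detection step makes it possible to check the feature layout without a camera or the MediaPipe package installed.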

These landmark coordinates are collected in Dataset.csv, which serves as the dataset for training and classification.
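One way the coordinates might be written to a CSV file is sketched below. The column layout (a `label` column followed by `x0, y0, z0, ..., x20, y20, z20`) is an assumption for illustration, since the actual structure of Dataset.csv is not described here. `append_sample` and `make_header` are hypothetical helpers, and the demo writes placeholder values to a temporary file rather than to the real dataset.

```python
import csv
import os
import tempfile

NUM_LANDMARKS = 21  # MediaPipe Hands reports 21 landmarks per hand


def make_header():
    """Assumed column names: label, then x0, y0, z0, ..., x20, y20, z20."""
    header = ["label"]
    for i in range(NUM_LANDMARKS):
        header.extend([f"x{i}", f"y{i}", f"z{i}"])
    return header


def append_sample(csv_path, label, features):
    """Append one labeled row, writing the header first if the file is new."""
    if len(features) != NUM_LANDMARKS * 3:
        raise ValueError("expected 63 feature values")
    is_new = not os.path.exists(csv_path) or os.path.getsize(csv_path) == 0
    with open(csv_path, "a", newline="") as f:
        writer = csv.writer(f)
        if is_new:
            writer.writerow(make_header())
        writer.writerow([label, *features])


# Demo with placeholder values, written to a temporary file.
demo_path = os.path.join(tempfile.mkdtemp(), "Dataset.csv")
append_sample(demo_path, "A", [0.5] * 63)
append_sample(demo_path, "B", [0.25] * 63)

with open(demo_path, newline="") as f:
    rows = list(csv.reader(f))
print(len(rows), len(rows[0]))  # 3 64
```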

A Support Vector Machine (SVM) model was then trained on this dataset using scikit-learn.
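The training step could look roughly like the following sketch, which fits scikit-learn's `SVC` on made-up data. The two classes, the 63-value feature vectors, and the RBF kernel are all assumptions chosen for illustration. The project's real features, labels, and hyperparameters are not stated here and may differ.

```python
import random

from sklearn.svm import SVC

rng = random.Random(0)  # fixed seed so the placeholder data is repeatable


def synthetic_sample(center):
    """Return 63 placeholder values scattered closely around `center`."""
    return [center + rng.uniform(-0.05, 0.05) for _ in range(63)]


# Placeholder data: two made-up classes with 20 samples each.
X = [synthetic_sample(0.2) for _ in range(20)]
X += [synthetic_sample(0.8) for _ in range(20)]
y = ["A"] * 20 + ["B"] * 20

# The kernel choice is an assumption; the source only says "SVM".
model = SVC(kernel="rbf")
model.fit(X, y)

# Classify two new feature vectors, one near each cluster center.
predictions = model.predict([[0.2] * 63, [0.8] * 63])
print(list(predictions))
```

With real data, the rows of Dataset.csv would take the place of `X` and `y`, and a held-out split would be needed to estimate accuracy.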