About • Pipeline • Scenario • Prerequisites • Set-up • Installation • Airflow Interface • Pipeline Task by Task • Learning Resources • Encountered difficulty • Next Steps

Educational project on how to build an ETL (Extract, Transform, Load) data pipeline, orchestrated with Airflow.

The data is extracted from Twitter. It is then transformed/processed with Python and loaded/stored in MongoDB and in PostgreSQl.

MongoDB is used as a Database in which json files are stored. Before a load, the database is cleared.

PostgreSQL is used as Database with two tables : users and tweets. Data are not cleared.

As a Twitter user, we face the problem of having to manually search for tweets related to a specific domain or user. With a dashboards showing multiple or single tweets related to multiple or single domain will be a significant improvement.

- Pipeline would be run every hour

due to the Tweeter API limitto extract the latest tweets.

- Create a Twitter Developer account.

MongoDB

- Register to MongoDB Atlas.

- Create a Database etl and a Collection News.

- Ensure that network access is enabled.

PostgreSQL:

- Create a localhost Database named

twitterETL.

Airflow:

- Setup Variables and connection

- Schema : Represent the PostgreSQl Database Name

Build the Docker images and start the containers with:

docker build . --tag extending_airflow:2.5.1

- Refer to the Airflow Installation before next step.

Run Airflow:

docker-compose up -d

After everything has been installed, you can check the status of your containers (if they are healthy) with:

docker ps

Note: it might take up to 30 seconds for the containers to have the healthy flag after starting.

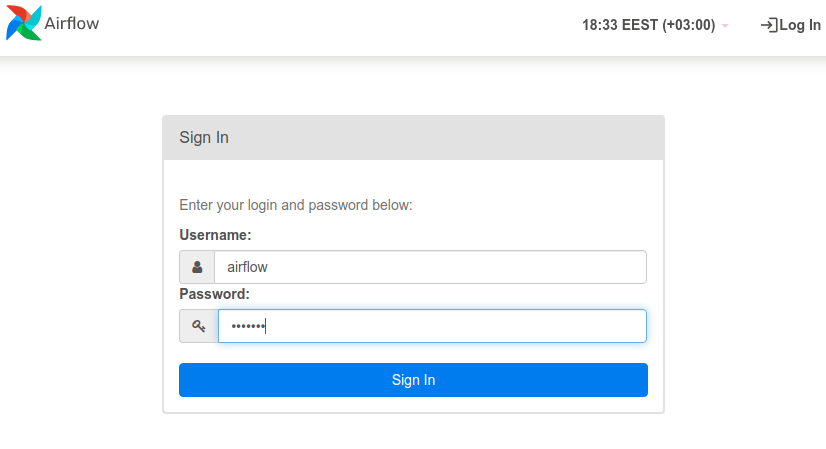

You can now access the Airflow web interface by going to http://localhost:8080/. If you have not changed them in the docker-compose.yml file, the default user is airflow and password is airflow:

After signing in, the Airflow home page is the DAGs list page. Here you will see all DAGs.

Note: If you update the code in the python DAG script, the airflow DAGs page has to be refreshed

You can access a DAG :

- PostgreSQL

- MongoDB

Create PostgreSQl table users and tweets if it does not exist.

Clear MongoDB collection.

Extract tweets from Twitter API and transform them into a json file, using only needed fields.

TWEET_INFO

- text

- created_at

USER_INFO

- username

- followers_count

- following_count

- created_at

- description

Used in PostgreSQl DAG. Deleting ' character in the text and description field.

Note: ' is a reserved character in PostgreSQl.

Load the processed data.

- MongoDB

-

Postgres:

- Users

- Tweets

- Airflow installation and setup

- Scrape data from Amazon website, as the first idea was to follow prices evolution of a product.