This code was tested on Python 3.8 with CUDA 12.1 and PyTorch 2.3.
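To compare an existing setup against the versions listed above, a short check along these lines may help. It is only a sketch: the helper names `detect_versions` and `report` are hypothetical and not part of this repository, and a mismatch does not necessarily mean the code will fail, only that the combination was not the one tested.

```python
import importlib
import sys

# Versions this README reports testing with; other versions may also work.
TESTED = {"python": "3.8", "torch": "2.3", "cuda": "12.1"}


def detect_versions():
    """Return the versions visible to this interpreter (None if unavailable)."""
    found = {"python": "%d.%d" % sys.version_info[:2], "torch": None, "cuda": None}
    try:
        torch = importlib.import_module("torch")
    except ImportError:
        return found  # PyTorch is not installed in this environment
    found["torch"] = str(torch.__version__)
    found["cuda"] = torch.version.cuda  # None for CPU-only builds
    return found


def report(found, tested=TESTED):
    """Build one line per component, flagging differences from the tested setup."""
    lines = []
    for name, want in tested.items():
        have = found.get(name)
        # Compare only major.minor, so "2.3.0+cu121" counts as a match for "2.3".
        ok = have is not None and have.split(".")[:2] == want.split(".")
        status = "match" if ok else "differs"
        lines.append(f"{name}: found {have}, tested with {want} [{status}]")
    return lines


if __name__ == "__main__":
    print("\n".join(report(detect_versions())))
```

Run it inside the activated Conda environment so that the interpreter and PyTorch build being inspected are the ones the project will use.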

- To set up the environment, create a Conda environment by running:

conda env create -f environment.yml

- Follow the instructions in parts 2 and 3 of MDM's README.md to download the required files for the Motion-Diffusion-Model code located in `external/MDM`.

- You can download some examples of generated motions to `workspace` by running:

gdown "1IdaCPpRWrmRX5AVXXUXymwFVHXt2CNnW&confirm=t"

unzip workspace.zip
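If the `unzip` command is not available (for example on some Windows setups), the archive can also be extracted with Python's standard library. The following is a minimal sketch rather than part of this repository: `extract_archive` is a hypothetical helper, and it assumes `workspace.zip` has already been downloaded into the current directory by the `gdown` command above.

```python
import zipfile
from pathlib import Path


def extract_archive(archive="workspace.zip", dest="."):
    """Extract `archive` into `dest` and return the sorted top-level entry names."""
    archive = Path(archive)
    if not archive.is_file():
        raise FileNotFoundError(f"{archive} not found; run the gdown command first")
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(dest)
        # Collect the first path component of every member to show what was unpacked.
        top_level = {name.split("/", 1)[0] for name in zf.namelist() if name}
    return sorted(top_level)
```

Calling `extract_archive()` from the repository root returns the top-level names that were unpacked, which is a quick way to see whether the archive produced the expected directory.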

Please refer to getting_started.ipynb.

Parts of this code base are adapted from MDM, Detectron2, and MvDeCor.

If you use this code or parts of it, please cite our paper:

@inproceedings{eldesokey2024latentman,

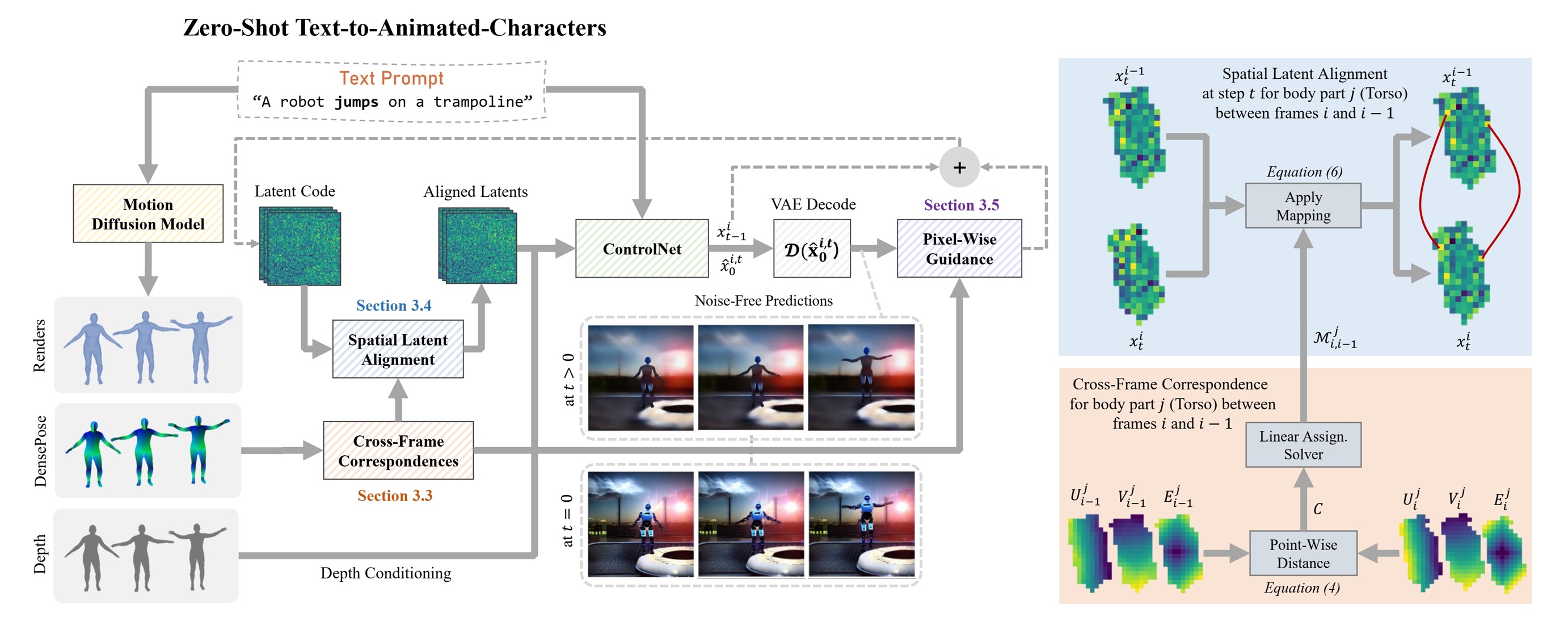

title={LATENTMAN: Generating Consistent Animated Characters using Image Diffusion Models},

author={Eldesokey, Abdelrahman and Wonka, Peter},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={7510--7519},

year={2024}

}