facebook: https://www.facebook.com/codemakerz

email: martandsays@gmail.com

In this tutorial, we will implement a basic data pipeline using an S3 bucket, AWS Lambda functions, and a Kinesis data stream.

Every time you upload a new file to the bucket, a Lambda function (producer.js) is triggered and pushes the content of that file to the Kinesis data stream. Another Lambda function (consumer.js) can then read it from the stream for further processing.

The pipeline uses the following AWS services:

- S3 bucket

- AWS Lambda functions (Node.js SDK)

- Kinesis data stream

- CloudWatch

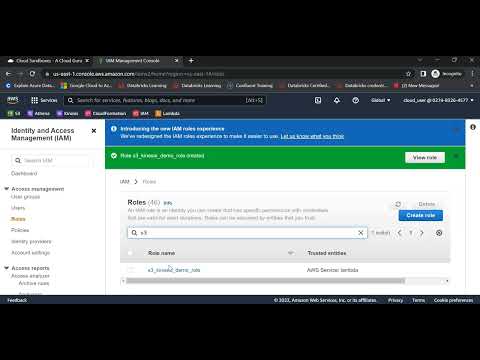

- Create a custom IAM role for the demo

- Create two Lambda functions: one producer and one consumer (Consumer1)

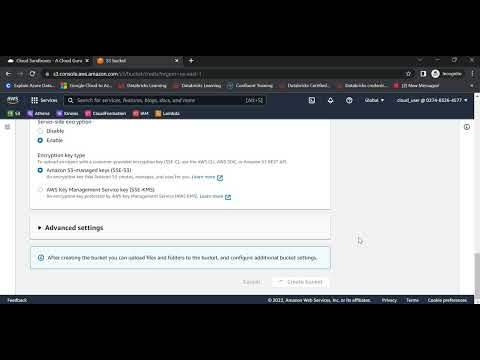

- Create an S3 bucket and add the producer Lambda function to its event notification configuration

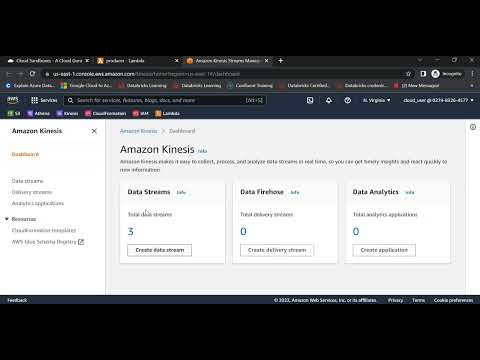
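Adding the Lambda function to the bucket's configuration creates an S3 event notification. As a sketch under stated assumptions, the function below builds the equivalent input for the S3 `PutBucketNotificationConfiguration` API; the bucket name, function ARN, and optional `uploads/` prefix filter are placeholders, and `bucketNotificationInput` is a hypothetical helper. When you use the console, S3 is also granted permission to invoke the function; outside the console that needs a separate Lambda resource-based permission for the `s3.amazonaws.com` principal.

```javascript
// Sketch: input shape for S3 PutBucketNotificationConfiguration.
// Bucket name, function ARN and prefix are placeholders (assumptions).
function bucketNotificationInput(bucket, producerFunctionArn, prefix) {
  const config = {
    LambdaFunctionArn: producerFunctionArn,
    // Fire on every kind of object creation (PUT, POST, COPY, multipart upload).
    Events: ["s3:ObjectCreated:*"],
  };
  if (prefix) {
    // Optional: only keys starting with `prefix` trigger the producer.
    config.Filter = { Key: { FilterRules: [{ Name: "prefix", Value: prefix }] } };
  }
  return {
    Bucket: bucket,
    NotificationConfiguration: { LambdaFunctionConfigurations: [config] },
  };
}

const input = bucketNotificationInput(
  "demo-pipeline-bucket",
  "arn:aws:lambda:us-east-1:123456789012:function:producer",
  "uploads/"
);
console.log(JSON.stringify(input, null, 2));
```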

- Create a Kinesis data stream

- Edit the producer code: it reads the uploaded S3 object and pushes its content to the Kinesis data stream

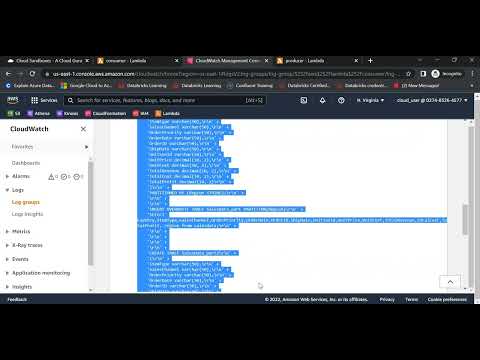
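A minimal sketch of what producer.js could do, not the exact code from the video. To keep it runnable without AWS, S3 and Kinesis access is injected through two hypothetical helpers, `getObjectText` and `putRecord`; the stream name `demo-data-stream` and bucket name are placeholders. The handler decodes each object key from the S3 event, reads the object, and sends one Kinesis record per file.

```javascript
// Minimal producer sketch (an assumption, not the exact producer.js from the video).
// I/O is injected so the handler can run without AWS:
//   getObjectText(bucket, key) -> Promise<string>  (hypothetical helper)
//   putRecord(input)           -> Promise<object>  (hypothetical helper)
function makeProducerHandler({ streamName, getObjectText, putRecord }) {
  return async function handler(event) {
    const results = [];
    for (const record of event.Records || []) {
      const bucket = record.s3.bucket.name;
      // S3 URL-encodes keys in notifications and turns spaces into "+".
      const key = decodeURIComponent(record.s3.object.key.replace(/\+/g, " "));
      const body = await getObjectText(bucket, key);
      const input = {
        StreamName: streamName,
        // The partition key picks the shard; Kinesis limits it to 256 characters.
        PartitionKey: key.slice(0, 256),
        // Kinesis expects the payload as bytes.
        Data: Buffer.from(JSON.stringify({ bucket, key, body }), "utf8"),
      };
      results.push(await putRecord(input));
    }
    return results;
  };
}

// Local run with fake I/O in place of S3 and Kinesis.
const sent = [];
const handler = makeProducerHandler({
  streamName: "demo-data-stream",
  getObjectText: async (bucket, key) => `contents of ${bucket}/${key}`,
  putRecord: async (input) => {
    sent.push(input);
    return { SequenceNumber: String(sent.length) };
  },
});

handler({
  Records: [{ s3: { bucket: { name: "demo-pipeline-bucket" }, object: { key: "hello+world.txt" } } }],
}).then(() => console.log(JSON.parse(sent[0].Data.toString("utf8"))));
```

In a deployed function using AWS SDK v3, `getObjectText` could wrap `s3.send(new GetObjectCommand({ Bucket, Key }))` followed by `Body.transformToString()` (`@aws-sdk/client-s3`), and `putRecord` could wrap `kinesis.send(new PutRecordCommand(input))` (`@aws-sdk/client-kinesis`); the video may use the older `aws-sdk` v2 calls instead. Sending a whole file as one record only suits small files, since a single Kinesis record payload is capped at 1 MB.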

- Edit the consumer code: it reads records from the Kinesis data stream. You can perform any custom processing here, such as saving to a database or a data lake. For demo purposes, I will just log the data.

- Add the Kinesis data stream as a trigger to the consumer Lambda function

- Test the pipeline: upload a file and check the CloudWatch logs
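A minimal sketch of a consumer that logs what it receives, assuming the record payloads are UTF-8 text (JSON or plain). It is not the exact consumer.js from the video. Lambda delivers Kinesis records in batches, with each payload base64-encoded in `record.kinesis.data`; the sample event at the end is hand-built and contains only the fields the handler reads.

```javascript
// Minimal consumer sketch (an assumption, not the exact consumer.js from the video).
// Lambda passes Kinesis records in batches; each payload arrives base64-encoded
// in record.kinesis.data.
async function handler(event) {
  const payloads = [];
  for (const record of event.Records || []) {
    const text = Buffer.from(record.kinesis.data, "base64").toString("utf8");
    let payload;
    try {
      payload = JSON.parse(text);
    } catch {
      payload = text; // Not JSON: keep the raw string instead of failing the batch.
    }
    // Demo behaviour: just log. Output from console.log ends up in the
    // function's CloudWatch Logs log group.
    console.log(`partitionKey=${record.kinesis.partitionKey}`, payload);
    payloads.push(payload);
  }
  return payloads;
}
// In consumer.js you would export it for Lambda: exports.handler = handler;

// Local run with a hand-built event (only the fields used above).
handler({
  Records: [
    {
      eventSource: "aws:kinesis",
      kinesis: {
        partitionKey: "hello.txt",
        data: Buffer.from(JSON.stringify({ key: "hello.txt", body: "hi" })).toString("base64"),
      },
    },
  ],
});
```

By default Lambda retries a failed Kinesis batch until it succeeds or the records expire, which blocks that shard in the meantime, so the sketch keeps malformed payloads as raw strings rather than throwing.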

https://www.youtube.com/watch?v=8EOS26MqPfU&list=PLUD3Fp3WhxlNv0euAsABB3-wZRIzu1o6I

In this video, we will create a custom IAM role for our application.
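A least-privilege policy for the custom role might look like the sketch below. The statement list is an assumption based on what the two functions do (read uploaded objects, write to and read from the stream, write logs), not the exact role from the video, and the ARNs are placeholders; `buildPipelinePolicy` is a hypothetical helper. AWS also provides the broader managed policies `AWSLambdaKinesisExecutionRole` and `AWSLambdaBasicExecutionRole`, which cover the consumer and logging permissions.

```javascript
// Sketch of a least-privilege policy for one demo role shared by both Lambda
// functions. Statements and ARNs are assumptions/placeholders.
function buildPipelinePolicy({ bucketArn, streamArn }) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Sid: "ProducerReadUploadedObjects",
        Effect: "Allow",
        Action: ["s3:GetObject"],
        Resource: [`${bucketArn}/*`], // objects inside the bucket
      },
      {
        Sid: "ProducerWriteToStream",
        Effect: "Allow",
        Action: ["kinesis:PutRecord", "kinesis:PutRecords"],
        Resource: [streamArn],
      },
      {
        Sid: "ConsumerReadFromStream",
        Effect: "Allow",
        Action: [
          "kinesis:DescribeStream",
          "kinesis:DescribeStreamSummary",
          "kinesis:GetRecords",
          "kinesis:GetShardIterator",
          "kinesis:ListShards",
        ],
        Resource: [streamArn],
      },
      {
        Sid: "WriteCloudWatchLogs",
        Effect: "Allow",
        Action: ["logs:CreateLogGroup", "logs:CreateLogStream", "logs:PutLogEvents"],
        Resource: ["arn:aws:logs:*:*:*"],
      },
    ],
  };
}

// Trust policy that lets the Lambda service assume the role.
const lambdaTrustPolicy = {
  Version: "2012-10-17",
  Statement: [
    {
      Effect: "Allow",
      Principal: { Service: "lambda.amazonaws.com" },
      Action: "sts:AssumeRole",
    },
  ],
};

console.log(
  JSON.stringify(
    buildPipelinePolicy({
      bucketArn: "arn:aws:s3:::demo-pipeline-bucket",
      streamArn: "arn:aws:kinesis:us-east-1:123456789012:stream/demo-data-stream",
    }),
    null,
    2
  )
);
```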

In this video, we will create two Lambda functions (producer.js and consumer.js) and an S3 bucket. We will use the AWS SDK for Node.js for development.

Here, we will create the Kinesis data stream and add code to our producer and consumer functions.

This is the final step, where we test the data pipeline and check the CloudWatch logs and the Kinesis stream's monitoring metrics.
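Creating the stream and adding the consumer's trigger in the console correspond to two API requests. The sketch below only builds their inputs (Kinesis `CreateStream` and Lambda `CreateEventSourceMapping`) and does not call AWS; the stream name, ARN, and batch size are placeholders. `shardsNeeded` is a hypothetical helper that sizes a provisioned stream from the per-shard write limit of 1 MB/s or 1,000 records/s, whichever is hit first.

```javascript
// Sketch only: builds request inputs, does not call AWS.
// Provisioned shards each accept up to 1 MB/s or 1,000 records/s of writes.
function shardsNeeded({ writeMBPerSec, recordsPerSec }) {
  return Math.max(1, Math.ceil(writeMBPerSec), Math.ceil(recordsPerSec / 1000));
}

// Input for Kinesis CreateStream (e.g. CreateStreamCommand in AWS SDK v3).
function createStreamInput(streamName, shardCount) {
  return {
    StreamName: streamName,
    ShardCount: shardCount,
    StreamModeDetails: { StreamMode: "PROVISIONED" },
  };
}

// Input for Lambda CreateEventSourceMapping: a Kinesis trigger added in the
// console is an event source mapping like this one.
function consumerTriggerInput(streamArn, functionName) {
  return {
    EventSourceArn: streamArn,
    FunctionName: functionName,
    StartingPosition: "LATEST", // "TRIM_HORIZON" would start from the oldest record
    BatchSize: 100, // records per invocation (100 is also the default)
  };
}

const shards = shardsNeeded({ writeMBPerSec: 2.5, recordsPerSec: 1500 }); // 3
console.log(createStreamInput("demo-data-stream", shards));
console.log(
  consumerTriggerInput(
    "arn:aws:kinesis:us-east-1:123456789012:stream/demo-data-stream",
    "Consumer1"
  )
);
```

In the example, 2.5 MB/s needs 3 shards while 1,500 records/s needs only 2, so bandwidth decides. For a small demo, one shard (or on-demand mode, which omits `ShardCount`) is enough.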