Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

Merge branch 'main' into dependabot/maven/org.codehaus.mojo-versions-…

…maven-plugin-2.17.1

Showing 54 changed files with 427 additions and 96 deletions.

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,58 @@ | ||

| # This workflow will build a Java project with Maven, and cache/restore any dependencies to improve the workflow execution time | ||

| # For more information see: https://docs.github.com/en/actions/automating-builds-and-tests/building-and-testing-java-with-maven | ||

|

|

||

| name: Java CI with Maven | ||

| on: | ||

| push: | ||

| branches: [ "main" ] | ||

| pull_request: | ||

| branches: [ "main" ] | ||

| schedule: | ||

| - cron: "0 2 * * 1-5" | ||

| concurrency: | ||

| group: ${{ github.ref }} | ||

| cancel-in-progress: true | ||

| jobs: | ||

| build: | ||

| runs-on: ubuntu-latest | ||

| steps: | ||

| - uses: actions/checkout@v4 | ||

| with: | ||

| submodules: recursive | ||

| - name: Set up JDK 17 | ||

| uses: actions/setup-java@v4 | ||

| with: | ||

| java-version: '17' | ||

| distribution: 'temurin' | ||

| cache: maven | ||

| - name: Fail on whitespace errors | ||

| run: git show HEAD --check | ||

| - name: Run Spotless | ||

| run: mvn -s .travis.settings.xml -Dgithub.username=${{ github.actor }} -Dgithub.password=${{ secrets.GITHUB_TOKEN }} spotless:check -B | ||

| - name: Cache Pip dependencies | ||

| uses: actions/cache@v4 | ||

| with: | ||

| path: ~/.cache/pip | ||

| key: ${{ runner.os }}-pip-${{ hashFiles('**/requirements.txt') }} | ||

| restore-keys: | | ||

| ${{ runner.os }}-pip- | ||

| - name: Install global requirements | ||

| run: pip3.10 install -r requirements.txt | ||

| - name: Run Black | ||

| run: black --fast --check --extend-exclude \/out . | ||

| - name: Install test requirements | ||

| run: pip3.10 install -r edu.cuny.hunter.hybridize.tests/requirements.txt | ||

| - name: Install with Maven | ||

| run: mvn -U -s .travis.settings.xml -Dgithub.username=${{ github.actor }} -Dgithub.password=${{ secrets.GITHUB_TOKEN }} -Dlogging.config.file=\${maven.multiModuleProjectDirectory}/logging.ci.properties -DtrimStackTrace=true -Dtycho.showEclipseLog=false install -B -q -DskipTests=true | ||

| - name: Print Python 3 version. | ||

| run: python3 --version | ||

| - name: Print Python 3.10 version. | ||

| run: python3.10 --version | ||

| - name: Clone our fork of PyDev | ||

| run: | | ||

| mkdir "$HOME/git" | ||

| pushd "$HOME/git" | ||

| git clone --depth=50 --branch=pydev_9_3 https://github.com/ponder-lab/Pydev.git | ||

| popd | ||

| - name: Test with Maven | ||

| run: mvn -U -s .travis.settings.xml -Dgithub.username=${{ github.actor }} -Dgithub.password=${{ secrets.GITHUB_TOKEN }} -Dlogging.config.file=\${maven.multiModuleProjectDirectory}/logging.ci.properties -DtrimStackTrace=true -Dtycho.showEclipseLog=false -B verify -Pjacoco coveralls:report |
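The `schedule` trigger in the workflow above uses the cron expression `0 2 * * 1-5`. As a rough illustration only, the sketch below shows which moments that expression selects: minute 0 of hour 2 on Monday through Friday, with GitHub Actions evaluating schedules in UTC. The function name `matches_nightly_schedule` is hypothetical and not part of the repository, and the sketch checks only this one expression rather than parsing cron syntax in general.

```python
from datetime import datetime, timezone


def matches_nightly_schedule(moment: datetime) -> bool:
    """Return True if `moment` falls on the cron expression "0 2 * * 1-5".

    Hypothetical helper for illustration. Naive datetimes are assumed to
    already be in UTC; aware datetimes are converted to UTC first.
    """
    if moment.tzinfo is not None:
        moment = moment.astimezone(timezone.utc)
    # cron numbers weekdays 0 (Sunday) through 6 (Saturday);
    # isoweekday() gives 1 (Monday) through 7 (Sunday).
    cron_weekday = moment.isoweekday() % 7
    return moment.minute == 0 and moment.hour == 2 and 1 <= cron_weekday <= 5


if __name__ == "__main__":
    monday = datetime(2024, 1, 1, 2, 0, tzinfo=timezone.utc)
    saturday = datetime(2024, 1, 6, 2, 0, tzinfo=timezone.utc)
    print(matches_nightly_schedule(monday))
    print(matches_nightly_schedule(saturday))
```

In practice, scheduled runs can start later than the nominal time when the service is under load, so the check above describes the intended schedule, not a guaranteed start time.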

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -1,27 +1,53 @@ | ||

| # Hybridize-Functions-Refactoring | ||

|

|

||

| [](https://app.travis-ci.com/ponder-lab/Hybridize-Functions-Refactoring) [](https://coveralls.io/github/ponder-lab/Hybridize-Functions-Refactoring?branch=main) [](https://github.com/khatchadourian-lab/Java-8-Stream-Refactoring/raw/master/LICENSE.txt) [](https://www.ej-technologies.com/products/jprofiler/overview.html) | ||

| [](https://github.com/ponder-lab/Hybridize-Functions-Refactoring/actions/workflows/maven.yml) [](https://coveralls.io/github/ponder-lab/Hybridize-Functions-Refactoring?branch=main) [](https://github.com/ponder-lab/Hybridize-Functions-Refactoring/raw/master/LICENSE) [](https://www.ej-technologies.com/products/jprofiler/overview.html) | ||

|

|

||

| ## Introduction | ||

|

|

||

| Refactorings for optimizing imperative TensorFlow clients for greater efficiency. | ||

| <img src="https://raw.githubusercontent.com/ponder-lab/Hybridize-Functions-Refactoring/master/edu.cuny.hunter.hybridize.ui/icons/icon.drawio.png" alt="Icon" align="left" height=150px /> Imperative Deep Learning programming is a promising paradigm for creating reliable and efficient Deep Learning programs. However, it is [challenging to write correct and efficient imperative Deep Learning programs](https://dl.acm.org/doi/10.1145/3524842.3528455) in TensorFlow (v2), a popular Deep Learning framework. TensorFlow provides a high-level API (`@tf.function`) that allows users to execute computational graphs using natural, imperative programming. However, writing efficient imperative TensorFlow programs requires careful consideration. | ||

|

|

||

| This tool consists of automated refactoring research prototype plug-ins for [Eclipse][eclipse] [PyDev][pydev] that assist developers in writing optimal imperative Deep Learning code in a semantics-preserving fashion. It includes refactoring preconditions and transformations for automatically determining when it is safe and potentially advantageous to migrate an eager function to hybrid, and for improving upon already hybrid Python functions. The approach utilizes the [WALA][wala] [Ariadne][ariadne] static analysis framework, which has been modernized to TensorFlow 2 and extended to work with modern Python constructs and whole projects. The tool also features a side-effect analysis that is used to determine whether a Python function is safe to hybridize. | ||

|

|

||

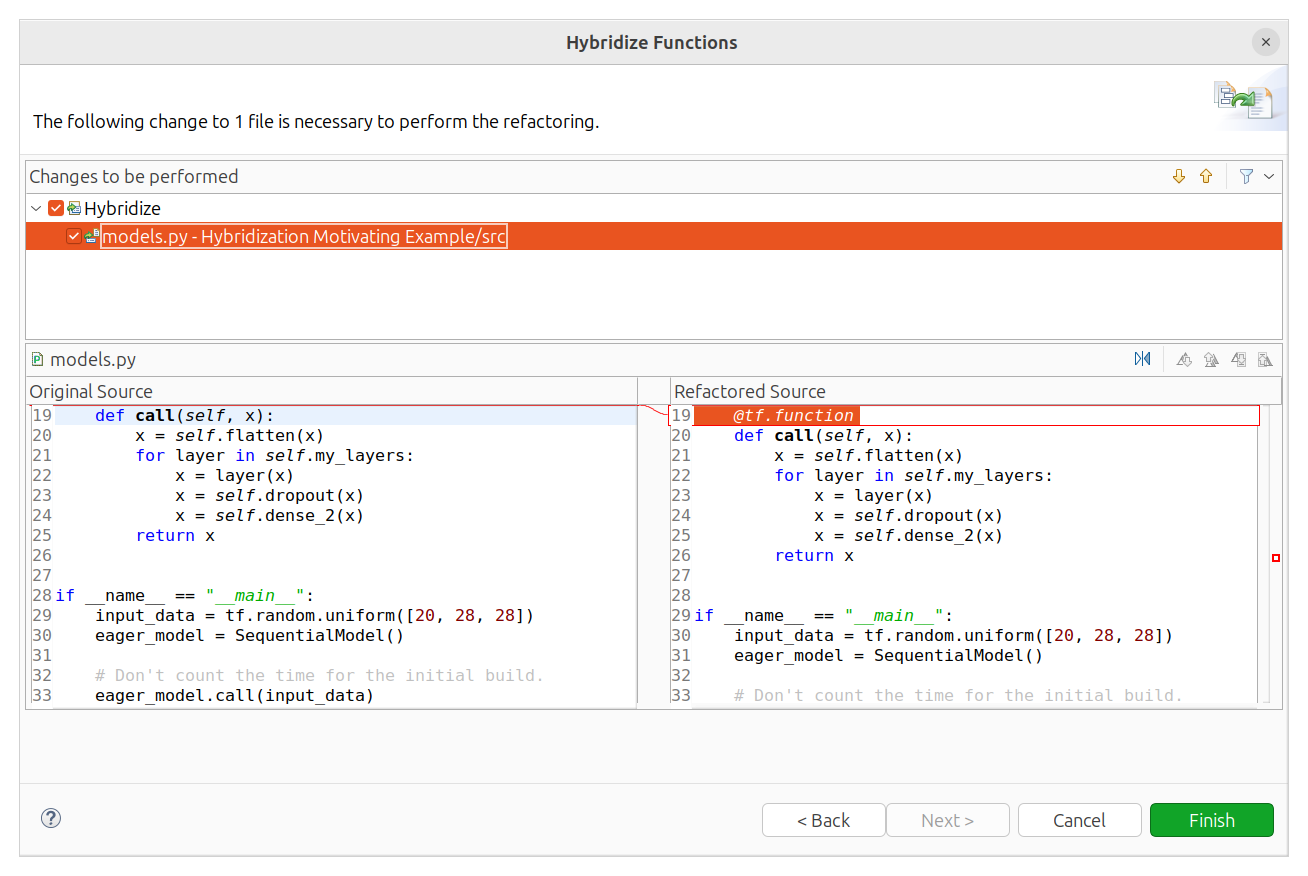

| ## Screenshot | ||

|

|

||

| ## Demonstration | ||

|  | ||

|

|

||

| ## Usage | ||

|

|

||

| The refactoring can be run in two different ways: | ||

|

|

||

| 1. As a command. | ||

| 1. Select a Python code entity. | ||

| 1. Select "Hybridize function..." from the "Quick Access" dialog (CTRL-3). | ||

| 1. As a menu item. | ||

| 1. Right-click on a Python code entity. | ||

| 1. Under "Refactor," choose "Hybridize function..." | ||

|

|

||

| Currently, the refactoring works only via the package explorer and the outline views. You can either select a code entity to optimize or select multiple entities. In each case, the tool will find functions in the enclosing entity to refactor. | ||

|

|

||

| ### Update | ||

|

|

||

| Due to https://github.com/ponder-lab/Hybridize-Functions-Refactoring/issues/370, only the "command" is working. | ||

|

|

||

| ## Installation | ||

|

|

||

| Coming soon! | ||

|

|

||

| ### Update Site | ||

|

|

||

| https://raw.githubusercontent.com/ponder-lab/Hybridize-Functions-Refactoring/main/edu.cuny.hunter.hybridize.updatesite | ||

|

|

||

| ### Eclipse Marketplace | ||

|

|

||

| Coming soon! | ||

|

|

||

| ## Contributing | ||

|

|

||

| For information on contributing, see [CONTRIBUTING.md][contrib]. | ||

|

|

||

| [wiki]: https://github.com/ponder-lab/Java-8-Stream-Refactoring/wiki | ||

| [wiki]: https://github.com/ponder-lab/Hybridize-Functions-Refactoring/wiki | ||

| [eclipse]: http://eclipse.org | ||

| [contrib]: https://github.com/ponder-lab/Hybridize-Functions-Refactoring/blob/main/CONTRIBUTING.md | ||

| [pydev]: http://www.pydev.org/ | ||

| [wala]: https://github.com/wala/WALA | ||

| [ariadne]: https://github.com/wala/ML |

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -1,2 +1,3 @@ | ||

| bin | ||

| bin/ | ||

| /target/ | ||

| lib/ |

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -2,6 +2,4 @@ | ||

|

|

||

| public enum PreconditionSuccess { | ||

| P1, P2, P3, | ||

| // P4, | ||

| // P5 | ||

| } | ||
